All is Cubes progress report

Tuesday, October 13th, 2020 10:50

I woke up last night with a great feature idea which on further examination was totally inapplicable to All is Cubes in its current state. (I was for some dream-ish reason imagining top-down tile- and turn-based movement, and had the idea to change the cursor depending on whether a click was a normal move or an only-allowed-because-we're-debugging teleport. This is totally unlike anything I've done yet and makes no sense for a first-person free movement game. But I might think about cursor/crosshair changes to signal what clicking on a block would do.)

That seems like a good excuse to write a status update. Since my last post I've made significant progress, but there are still large missing pieces compared to the original JavaScript version.

(The hazard in any rewrite, of course, is second-system effect — “we know what mistakes we made last time, so let's make zero of them this time”, with the result that you add both constraints and features, and overengineer the second version until you have a complex system that doesn't work. I'm trying to pay close attention to signs of overconstraint.)

Now done:

There's a web server you can run (`aic-server`) that will serve the web app version; right now it's just static files with no client-server features.

Recursive blocks exist, and they can be rendered both in the WebGL and raytracing modes.

There's an actual camera/character component, so we can have perspective projection, WASD movement (but not yet mouselook), and collision.

For collision, right now the body is considered a point, but I'm in the middle of adding axis-aligned box collisions. I've improved on the original implementation in that I'm using the raycasting algorithm rather than making three separate axis-aligned moves, so we have true “continuous collision detection” and fast objects will never pass through walls or collide with things that aren't actually in their path.
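To illustrate the idea for the point-body case, here is a minimal sketch of stepping a moving point through a grid of unit cubes in the order it actually crosses them. It assumes a standard grid-traversal approach and is not the project's actual code; `first_hit` and the `is_solid` callback are hypothetical names introduced for the example.

```rust
/// Integer coordinates of a unit cube in the grid.
type Cube = [i32; 3];

/// Walks the cubes a point passes through when moving from `origin` by `delta`.
/// Returns the first solid cube encountered and the fraction (0.0..=1.0) of the
/// move completed when it is entered, or `None` if the whole move is clear.
/// `is_solid` is a hypothetical stand-in for a lookup into the world.
pub fn first_hit(
    origin: [f64; 3],
    delta: [f64; 3],
    is_solid: impl Fn(Cube) -> bool,
) -> Option<(Cube, f64)> {
    let mut cube: Cube = [
        origin[0].floor() as i32,
        origin[1].floor() as i32,
        origin[2].floor() as i32,
    ];
    let mut step = [0i32; 3];
    // t_max: move fraction at which the next cube boundary is crossed, per axis.
    // t_delta: move fraction needed to cross one whole cube, per axis.
    let mut t_max = [f64::INFINITY; 3];
    let mut t_delta = [f64::INFINITY; 3];
    for axis in 0..3 {
        if delta[axis] > 0.0 {
            step[axis] = 1;
            t_delta[axis] = 1.0 / delta[axis];
            t_max[axis] = (cube[axis] as f64 + 1.0 - origin[axis]) / delta[axis];
        } else if delta[axis] < 0.0 {
            step[axis] = -1;
            t_delta[axis] = -1.0 / delta[axis];
            t_max[axis] = (cube[axis] as f64 - origin[axis]) / delta[axis];
        }
    }
    if is_solid(cube) {
        return Some((cube, 0.0));
    }
    loop {
        // Advance along whichever axis reaches its next boundary soonest.
        let mut axis = 0;
        for a in 1..3 {
            if t_max[a] < t_max[axis] {
                axis = a;
            }
        }
        let t = t_max[axis];
        if t > 1.0 {
            // The move ends before another boundary is crossed
            // (this also covers a zero-length move, where t is infinite).
            return None;
        }
        cube[axis] += step[axis];
        t_max[axis] += t_delta[axis];
        if is_solid(cube) {
            return Some((cube, t));
        }
    }
}
```

Because every cube along the path is visited in order, a move of 100 units in one step still stops at a one-cube-thick wall, and a diagonal move never reports a cube that only one of the per-axis moves would have touched.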

You can click on blocks to remove them (but not place new ones).

Most of the lighting algorithm from the original, with the addition of RGB color.

Also new in this implementation, `Space` has an explicit field for the “sky color” which is used both for rendering and for illuminating blocks from outside the bounds. This actually reduces the number of constants used in the code, but also gets us closer to “physically based rendering”, and allows having “night” scenes without needing to put a roof over everything. (I expect to eventually generalize from a single color to a skybox of some sort, for outdoor directional lighting and having a visible horizon, sun, or other decorative elements.)

Rendering space in chunks instead of a single list of vertices that has to be recomputed for every change.
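The sky color idea can be sketched roughly as follows: any light lookup outside the bounds falls back to one configurable color instead of a hard-coded constant. This is an illustrative simplification, not the real `Space` type; the field layout, `Rgb`, and the method names are all assumptions made for the example.

```rust
/// Simple RGB light or color value (hypothetical type for this sketch).
#[derive(Clone, Copy, Debug, PartialEq)]
pub struct Rgb(pub f32, pub f32, pub f32);

/// A much-simplified stand-in for a space of cubes with per-cube light.
pub struct Space {
    /// The space covers 0..size on each axis.
    size: [i32; 3],
    /// One stored light value per cube inside the bounds.
    light: Vec<Rgb>,
    /// Used for rendering the background and as the light outside the bounds.
    pub sky_color: Rgb,
}

impl Space {
    pub fn new(size: [i32; 3], sky_color: Rgb) -> Self {
        assert!(size.iter().all(|&s| s > 0), "size must be positive");
        let volume = (size[0] * size[1] * size[2]) as usize;
        Space {
            size,
            light: vec![Rgb(0.0, 0.0, 0.0); volume],
            sky_color,
        }
    }

    /// Index into `light`, or `None` if `cube` is outside the bounds.
    fn index(&self, cube: [i32; 3]) -> Option<usize> {
        for axis in 0..3 {
            if cube[axis] < 0 || cube[axis] >= self.size[axis] {
                return None;
            }
        }
        Some(((cube[0] * self.size[1] + cube[1]) * self.size[2] + cube[2]) as usize)
    }

    /// Light at `cube`; anything outside the bounds is lit by the sky.
    pub fn light_at(&self, cube: [i32; 3]) -> Rgb {
        match self.index(cube) {
            Some(i) => self.light[i],
            None => self.sky_color,
        }
    }

    /// Stores a light value; returns false if `cube` is out of bounds.
    pub fn set_light(&mut self, cube: [i32; 3], value: Rgb) -> bool {
        match self.index(cube) {
            Some(i) => {
                self.light[i] = value;
                true
            }
            None => false,
        }
    }
}
```

With this shape, a "night" scene is just a `Space` constructed with a dark `sky_color`; neither the lighting pass nor the renderer needs its own constant.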

Added a data structure (`EvaluatedBlock`) for caching computed details of blocks like whether their faces are opaque, and used it to correctly implement interior surface removal and lighting. This will also be critical for efficiently supporting things like rotated variants of blocks. (In the JS version, the `Block` type was a JS object which memoized this information, but here, `Block` is designed to be lightweight and copiable (because I've replaced having a `Blockset` defining numeric IDs with passing around the actual `Block` and letting the `Space` handle allocating IDs), so it's less desirable to be storing computed values in `Block`.)

Made nearly all of the GL/luminance rendering code not wasm-specific. That way, we can support "desktop application" as an option if we want to (I might do this solely for purposes of being able to graphically debug physics tests) and there is less code that can only be compiled with the wasm cross-compilation target.
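The split between a lightweight `Block` and a cached `EvaluatedBlock` might look something like the sketch below. Only the names `Block` and `EvaluatedBlock` come from the text above; the fields, the `Palette` type (standing in for the ID allocation that `Space` does), and `face_is_drawn` are hypothetical and much simpler than whatever the real code does.

```rust
use std::collections::HashMap;

/// A lightweight, copyable block definition (fields are assumptions).
#[derive(Clone, Copy, Debug, PartialEq, Eq, Hash)]
pub enum Block {
    Air,
    Solid { rgba: [u8; 4] },
}

/// Derived facts about a block, computed once and then cached.
#[derive(Clone, Debug, PartialEq)]
pub struct EvaluatedBlock {
    /// True if the block completely hides whatever is behind it.
    pub opaque: bool,
    /// True if there is anything to draw at all.
    pub visible: bool,
}

impl Block {
    /// Computes the derived facts; callers are expected to cache the result.
    pub fn evaluate(&self) -> EvaluatedBlock {
        match *self {
            Block::Air => EvaluatedBlock { opaque: false, visible: false },
            Block::Solid { rgba } => EvaluatedBlock {
                opaque: rgba[3] == 255,
                visible: rgba[3] > 0,
            },
        }
    }
}

/// Hypothetical stand-in for the part of a space that hands out numeric IDs
/// and keeps one cached `EvaluatedBlock` per ID.
pub struct Palette {
    ids: HashMap<Block, u16>,
    evaluated: Vec<EvaluatedBlock>,
}

impl Palette {
    pub fn new() -> Self {
        Palette { ids: HashMap::new(), evaluated: Vec::new() }
    }

    /// Returns the existing ID for `block`, or allocates one and evaluates it.
    pub fn id_for(&mut self, block: Block) -> u16 {
        if let Some(&id) = self.ids.get(&block) {
            return id;
        }
        let id = self.evaluated.len() as u16;
        self.evaluated.push(block.evaluate());
        self.ids.insert(block, id);
        id
    }

    /// Cached details for a previously allocated ID.
    pub fn evaluated(&self, id: u16) -> &EvaluatedBlock {
        &self.evaluated[id as usize]
    }
}

/// Interior surface removal: a face is drawn only if the block is visible
/// and the neighbor on that side does not completely hide it.
pub fn face_is_drawn(this: &EvaluatedBlock, neighbor: &EvaluatedBlock) -> bool {
    this.visible && !neighbor.opaque
}
```

The point of the arrangement is that `Block` values stay cheap to copy and compare, while the per-face checks made during meshing and lighting read precomputed flags by ID.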

Integrated embedded_graphics to allow us to draw text (and other 2D graphics) into voxels. (That library was convenient because it came with fonts and because it allows implementing new drawing targets as the minimal interface "write this color (whatever you mean by color) to the pixel at these coordinates".) I plan to use this for building possibly the entire user interface out of voxels — but for now it's also an additional tool for test content generation.
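The "write this color to the pixel at these coordinates" interface can be illustrated with a self-contained sketch. The trait below is a hypothetical stand-in written for this example, not the actual `embedded_graphics` API, and `VoxelPlane`, `BlockId`, and `draw_hline` are likewise invented names; the y-axis flip is an assumption about how 2D coordinates would map onto voxels.

```rust
use std::collections::HashMap;

/// Hypothetical minimal drawing-target interface: the only thing a target
/// must be able to do is accept one colored pixel at a time.
pub trait PixelTarget {
    /// Whatever the target means by "color".
    type Color;
    fn draw_pixel(&mut self, x: i32, y: i32, color: Self::Color);
}

/// For a voxel target, the "color" of a pixel can simply be a block.
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
pub struct BlockId(pub u16);

/// A flat drawing surface embedded in voxel space at a fixed z coordinate.
pub struct VoxelPlane {
    pub z: i32,
    pub voxels: HashMap<[i32; 3], BlockId>,
}

impl PixelTarget for VoxelPlane {
    type Color = BlockId;

    fn draw_pixel(&mut self, x: i32, y: i32, color: BlockId) {
        // 2D graphics usually have y increasing downward, so flip it here
        // (an assumption for this sketch) to keep drawings upright.
        self.voxels.insert([x, -y, self.z], color);
    }
}

/// Any drawing routine written against the trait works on voxels unchanged.
pub fn draw_hline<T>(target: &mut T, x0: i32, x1: i32, y: i32, color: T::Color)
where
    T: PixelTarget,
    T::Color: Copy,
{
    for x in x0..=x1 {
        target.draw_pixel(x, y, color);
    }
}
```

Text rendering follows the same pattern: a font routine that only ever calls `draw_pixel` ends up placing blocks without knowing that its "pixels" are voxels.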

Still to do that original Cubes had:

- Mouselook/pointer lock.

- Block selection UI and placement.

- Any UI at all other than movement and targeting blocks. I've got ambitious plans to build the UI itself out of blocks, which both fits the "recursive self-defining blocks" theme and means I can do less platform-specific UI code (while running headlong down the path of problematically from-scratch inaccessible video game UI).

- Collision with recursive subcubes rather than whole cubes (so slopes/stairs and other smaller-than-an-entire-cube blocks work as expected).

- Persistence (saving to disk).

- Lots and lots of currently unhandled edge cases and "reallocate this buffer bigger" cases.

Stuff I want to do that's entirely new:

- Networking; if not multiplayer, at least the web client saves its world data to a server. I've probably already gone a bit too far down the path of writing a data model without consideration for networking.

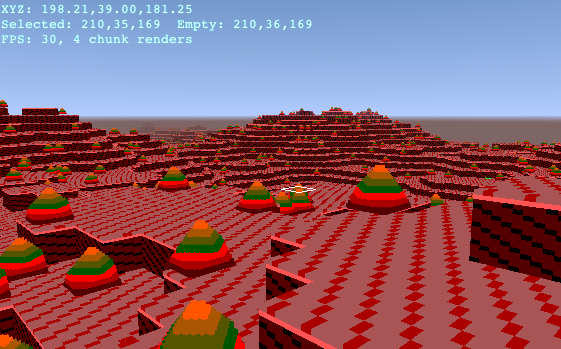

![[screenshot]](https://switchb.org/kpreid/2020/all-is-cubes-10-13-progress.png)

![[console screenshot]](https://switchb.org/kpreid/2020/all-is-cubes-first-console.png)

![[WebGL screenshot]](https://switchb.org/kpreid/2020/all-is-cubes-first-webgl.png)

When I wrote
